Idiocy for All and the Rise of International Large-Scale Educational Assessments

Almost any education-related topic seems to turn into an overheated debate, provoking very strong gut reactions and diminishing any hope for productive discussions that carefully analyze contrasting perspectives and forms of evidence. This is certainly the case with International Large-Scale Educational Assessments (ILSEAs), such as PISA or TIMSS, where nuanced discussion and methodical analysis of their role in improving student achievement are scarce.

Major publications on the latest results of any of the many ILSEAs – research articles, newspaper reports, or blogs – make it clear that these assessments are often linked to the search for answers to various educational problems. However, there is little consensus among stakeholders about the policy value and relevance of ILSEAs. In particular, are policymakers using ILSEA results to revise, plan, and execute educational reforms? What changes in national education policies and practices, if any, have countries made as a result of ILSEAs?

To answer these questions, we analyzed over a hundred research articles focused specifically on them and surveyed 90 scholars, asking them the same questions. Our research shows that it is almost impossible to establish any causal or direct relationship between ILSEAs and changes in educational policies. Nonetheless, we found researchers, academics, and policymakers making very strong arguments for the existence of a direct relationship, with one important caveat: they disagree about its direction. Some studies found a positive or beneficial relationship between ILSEAs and changes in educational policies, while others found a negative one. (We return to the results of our study shortly.)

As noted above, we should not be surprised by the polarization of our results. Politicians, researchers, teachers, administrators, students, and their families have very strong opinions and perspectives about what works in education, what needs to be fixed, and what the “fix” should be. Each of these stakeholders attacks the others with a range of arguments, but two of the most common are “You are an idiot; everybody agrees with my idea, which is just good common sense,” coupled with the dismissive “Your idea lacks any evidence, and even if you have some, it is not as strong as mine.” In fact, when it comes to education, the tendency to be idiotic seems quite common, and in many cases proudly so. By “idiotic,” we do not mean the common usage, someone who is not very clever, but the word’s original meaning in Ancient Greek, as Walter C. Parker (2005) explains:

…idiocy shares with idiom and idiosyncratic the root idios, which means private, separate, self-centered – selfish. “Idiotic” was in the Greek context a term of reproach. When a person’s behavior became idiotic – concerned myopically with private things and unmindful of common things – then the person was believed to be like a rudderless ship, without consequence save for the danger it posed to others. This meaning of idiocy achieves its force when contrasted with politës (citizen) or public. Here we have a powerful opposition: the private individual versus the public citizen (p. 344).

To understand the extent to which educational stakeholders exhibit idiotic attitudes towards ILSEAs, and towards education reform more broadly, one would first need to examine the discourse around, and reactions to, ILSEAs and their results. Research on ILSEAs has primarily focused on student performance and on disparities in outcomes by gender and socioeconomic status, with more limited research on stakeholder attitudes. Our recent research (Fischman & Topper with Silova & Goebel, 2017), funded by the Open Society Foundation, sought to fill this gap by looking specifically at whether national-level educational stakeholders (e.g., ministries of education, national policymakers, other national political and social actors) value these types of international measures of student attainment and to what extent they have integrated ILSEAs into their work at the national level.

ILSEAs as Tools of Legitimation

Our exploratory review of the ILSEA literature found that policymakers appear to use these assessments as tools to legitimate existing or new educational reforms, although there is little evidence of any causal relationship, positive or negative, between ILSEA participation and reform implementation. That is, educational reforms have often already been proposed or are already underway, and policymakers use ILSEA results as they become available to argue for or against new or existing legislation.

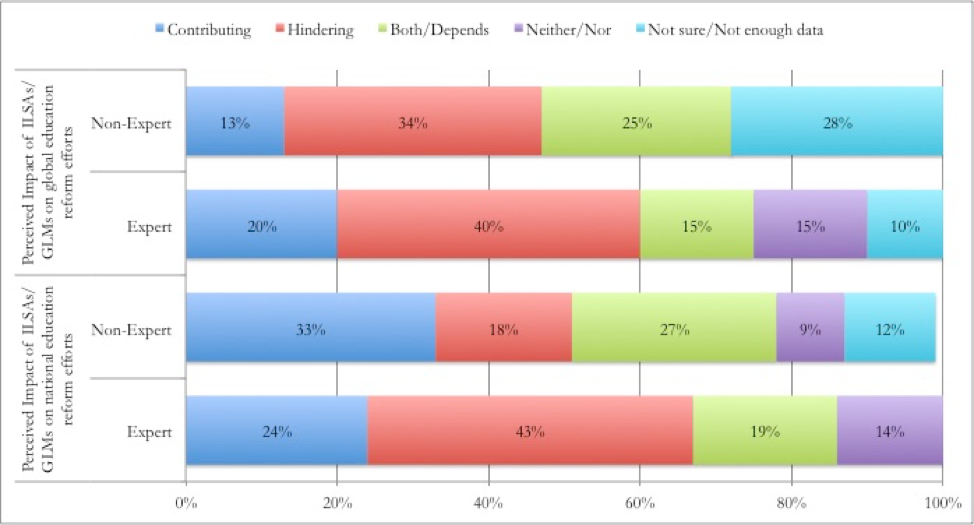

At the same time, results from our two surveys of ILSEA experts, policymakers, and educators pointed to a growing perception among respondents that ILSEAs are affecting national educational policies, with 38% of all survey respondents stating that ILSEAs were generally misused in national policy contexts. Interestingly, experts were generally more critical of ILSEAs than non-experts, with 43% of them arguing that ILSEAs are often misused. These experts explained that policymakers have little understanding of ILSEAs and use them for “ceremonial effects,” while also arguing that the assessments are too broad and decontextualized to be used meaningfully in national contexts. Drawing on their professional and personal experiences, respondents were divided over whether ILSEAs actually contribute to or hinder national education reform efforts.

Figure 1. Survey respondents’ perceived impact of international large-scale assessments and global learning metrics (ILSAs/GLMs) on national and global education policies

Note: Includes responses from both the expert and non-expert surveys.

Perhaps the most significant finding about the use of ILSEAs in the literature we reviewed is the way they have made possible new conditions for educational comparison at the national, regional, and global levels. Arising from large-scale international comparisons, these new conditions have given rise to many myths about education that are increasingly taken as scientific truth: that the presumed poor performance of all public schools is due to teacher (in)effectiveness, that there is a causal link between ILSEA results and economic growth, or, more generally, that education “crises” are impending worldwide (see Rappleye and Komatsu’s (2017) recent commentary about “flawed statistics” and new “truths” in education policymaking). These conditions also foster the assumption that there exists a single, globally applicable “best practice” or “best policy” that can uniformly inform policymaking and improve education in local contexts. From our perspective, the challenge is to avoid the illusion of certainty that any quantitative measure provides. Granted, this challenge is not easy to overcome because, as Nobel laureate Simon Kuznets (1934) observed:

With quantitative measurements especially, the definiteness of the result suggests, often misleadingly, a precision and simplicity in the outlines of the object measured. Measurements of national income [and, we can add, of education] are subject to this type of illusion and resulting abuse, especially since they deal with matters that are the center of conflict of opposing social groups where the effectiveness of an argument is often contingent upon oversimplification. (pp. 5–7)

Our research shows that, on the one hand, ILSEAs have the potential to provide governments and education stakeholders with useful and relevant modes of comparison that purportedly allow educational achievement to be assessed both within cities, states, and regions, and between countries. On the other hand, it is dangerous to view ILSEA results (the good, the bad, and the ugly) through idiotic lenses, without considering the strong influence of unequal educational opportunities in various contexts or acknowledging the broader political and economic agendas driving the production and use of ILSEAs in education.

Generating oversimplified narratives from ILSEAs, disregarding different contexts and multiple obstacles, showing a lack of concern for the educational opportunities and rights of millions of children, and focusing all one’s energy on justifying one’s own opinions – while quickly discarding any counterevidence in order to legitimate one’s own interests and benefits – is a genuine form of educational idiocy. The best defense against educational idiocy? We have already discovered it, discussed it, experimented with it, assessed it, and considered the evidence: avoid exclusive reliance on simplistic quantitative measures in determining education outcomes, shift attention away from short-term strategies designed to quickly climb the ILSEA rankings, and implement proven strategies that reduce inequalities in opportunity in order to improve long-term outcomes. Above all, stop pushing for education reforms based on a single, narrow yardstick of quality.

More than ever, we need to consider multiple types and sources of data, we need to explore more meaningful ways of reporting comparative data, we need to recognize the importance of the civic and public purposes of education, and we need to involve our diverse communities – parents, educators, administrators, community leaders, teacher union representatives, and students – in a public dialogue about what education is and ought to be about. Overcoming educational idiocy would thus entail a return to educational questions that are larger and more important than how a country performs on international large-scale assessments.

Acknowledgements

A shorter version of this essay appeared on Education International’s Worlds of Education blog.

References

Fischman, G. E., & Topper, A., with Silova, I., & Goebel, J. (2017). An examination of perspectives and evidence on global learning metrics [Final report for the Open Society Foundation] (CASGE Working Paper 2). Tempe, AZ: Center for Advanced Studies in Global Education.

Fischman, G. E. (2016, May 12). The simplimetrification of educational research. Worlds of Education, Blog of Education International.

Kuznets, S. (1934). National Income, 1929-32. U.S. Congress.

Parker, W. C. (2005). Teaching against idiocy. Phi Delta Kappan, 86(5), 344-351.

Rappleye, J., & Komatsu, H. (2017, July 6). Teachers, “smart people” and flawed statistics: What I want to tell my dad about PISA scores and economic growth. Worlds of Education, Blog of Education International.